AI/Chat GPT in teaching and research

A Webinar by the CENTRAL Network on Artificial Intelligence and academic teaching and research with expert Dr. Bernhard Knapp from the UAS Technikum Wien (Vienna)

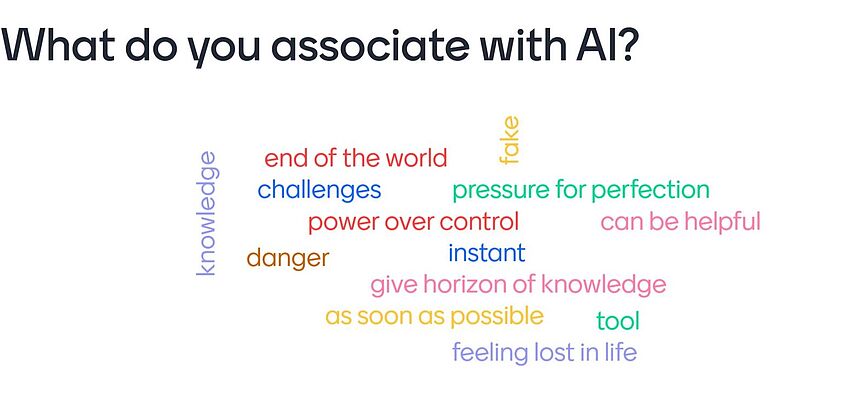

Word cloud created with participants at the beginning of the webinar

The CENTRAL Network sets out to address current topics. Last year, in June 2022, the network organized a panel discussion on “War in Ukraine: a turning point for the relationship between Central Europe and Russia?”. This year, CENTRAL has set out to launch a webinar series on Artificial Intelligence (AI) and how programs like Chat GPT are significantly impacting academic teaching and learning. The goal of each event is not only to have experts speak on different areas, but also to engage with the participants to create an overview of the issues and concerns that arise. These webinars are designed to create space to engage with experts, discuss possible solutions, and collect thoughts and concerns in order to expand our understanding.

On the 25th of April, Dr. Bernhard Knapp, program director for the department of AI engineering at the University of Applied Sciences Technikum Vienna, took the time to talk to us on the subject of "AI/Chat GPT in teaching and research". After a very informative keynote by the expert, various questions were posed and discussed at length. Of course, it is difficult to find a one-size-fits-all answer on the subject, and developing a strategy around AI is a process that takes time and effort.

Here are some thoughts on the topics discussed:

What is AI?

AI is not a thinking, intelligent being as such. It is, simply put, a tool that relies solely on the input it gets and on how humans design and code it. The first versions of Chat GPT used information from the internet to provide an answer to any question or, more accurately, to finish a sentence with the most probable phrase. AI has no “motivation” of its own and can only produce output from data it received in the past. It cannot predict future outcomes or create anything truly new, although it may appear to do so and to have a “self”. Consequently, and by nature, it cannot be defined as “good” or “bad”. To produce an output, large language models (LLMs) like Chat GPT, which are predefined by humans, use a certain number of inputs to predict the next word or phrase a human would most likely use.
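The idea of "finishing a sentence with the most probable word" can be illustrated with a deliberately tiny sketch. This is not how Chat GPT is implemented: real LLMs use neural networks trained on vast amounts of text, whereas the example below only counts which word follows which in a made-up corpus. The function names and the corpus are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus (an assumption for this sketch, not real training data).
corpus = "the cat sat on the mat . the cat chased the mouse . the cat slept ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))      # cat
print(predict_next_word(model, "unicorn"))  # None
```

Even this toy version shows the two points made above: the prediction depends entirely on the text the model has seen, and for a word that never appeared in that text it has nothing to offer.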

One could argue that these versions of AI are more a mirror of (online) society than a representation of a supposed AI character. And therein lies the problem with the prototype versions of Chat GPT and other LLMs. The patterns they have learned are based on data mining written opinions, remarks, comments, etc. available on the internet. However, as we all know, the internet is full of (false) information, questionable views, hidden contexts, etc., which risk compromising what the LLM learns. This problem was addressed with supervised learning methods to trim and train the program via large-scale click farms. This method involves hiring people to “train” Chat GPT on which replies to certain requests are more “helpful”. The people involved used the raw version of Chat GPT and gave feedback on each reply. In this process, they did not provide a full “correct” answer. They only voted the output up or down, thereby steering the model toward a more “desired” result.

Of course, there was only an estimate, or rather a general assumption, of what “better output” means. One of the axioms used for the training was: All humans are born equal! Such ideas were “taught” to the chatbot. In context, this raises many more questions: What were the motivations of these people? What was their basic set of values? How did they decide which replies to vote up or down?

Discussing such subjects would have led the webinar further into philosophy and terminology: What is “intelligence”? What is “right” or “wrong”? What is “better”?

How do we know if a text is written by AI?

Short answer: we can’t! When a text created by Chat GPT Version 4 is run through a conventional plagiarism scan, the percentage highlighted as copied material is almost always low enough to pass. Advanced AI scans can produce a range of results. The scan may determine that no AI was used, or it may flag only a small percentage as AI generated, even if the entire text was generated by Chat GPT. It is also possible that sentences written by a human author are identified as written by AI (false positives).

Watermarks set into the AI code itself to reference back to AI are more reliable, as these watermarks are difficult to remove. However, there are workarounds that can make it impossible to retrace the source.

AI detectors will keep improving in tandem with the AI systems that generate the text.

How do we deal with it?

Unfortunately, there is no definitive answer to this question yet. To put it in simple terms: we need to adjust to the new possibilities this widely accessible technology creates. Using Chat GPT for research and the development of ideas can be useful, but only if it is referenced in the final product. Of course, plagiarism is still illegal in academia. So how can students be graded fairly for their work if there is no way to definitively identify what counts as cheating?

Again, there is no simple answer. However, two approaches were presented by the keynote speaker during this webinar:

1: Create new output

Focus on having students create new work and their own contributions if that makes sense in their academic fields. Evaluate these contributions and give them more value. Introduction, literature review, summarising, etc. should count for little to nothing as these parts can easily be created by Chat GPT.

2: Defend your work

In-person oral exams and defences of the papers and other work produced by students are another approach that could counter the misuse of AI for academic work. Having students defend their work by answering questions about it can be a way to make sure the output was created by the students themselves.

There is a need to find more creative ways to evaluate the knowledge of students. Transparency on how to manage these challenges is key.

___________

We would like to thank our expert, Dr. Knapp, for taking the time to share his knowledge with us!

Also, we would like to thank the audience and all who participated in the discussion.

This summary was written by the technical coordinators of the CENTRAL Network.

If you have any requests or concerns, please be so kind as to contact us at central-network@univie.ac.at

Don’t miss any CENTRAL events: you can sign up for the CENTRAL Newsletter here.